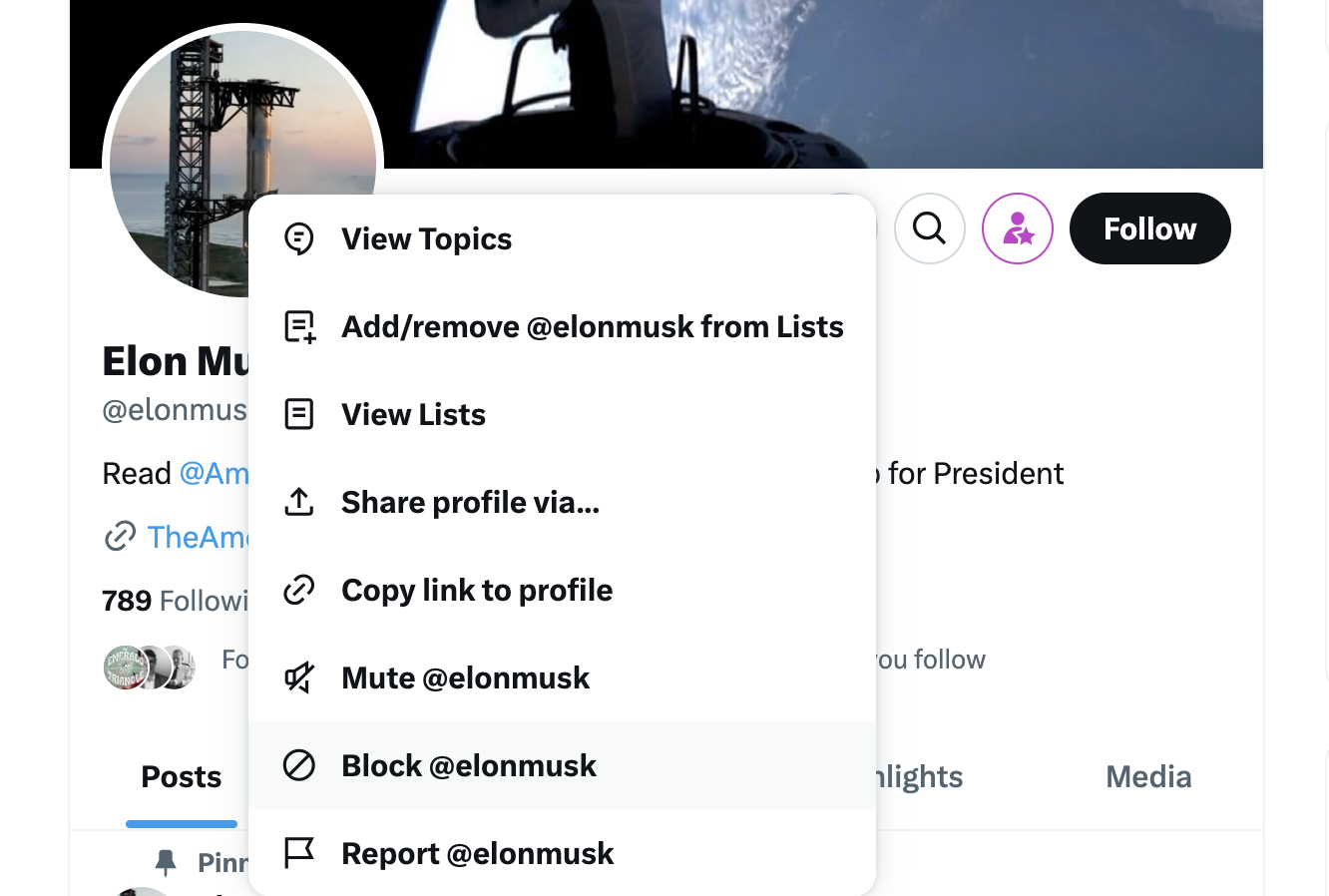

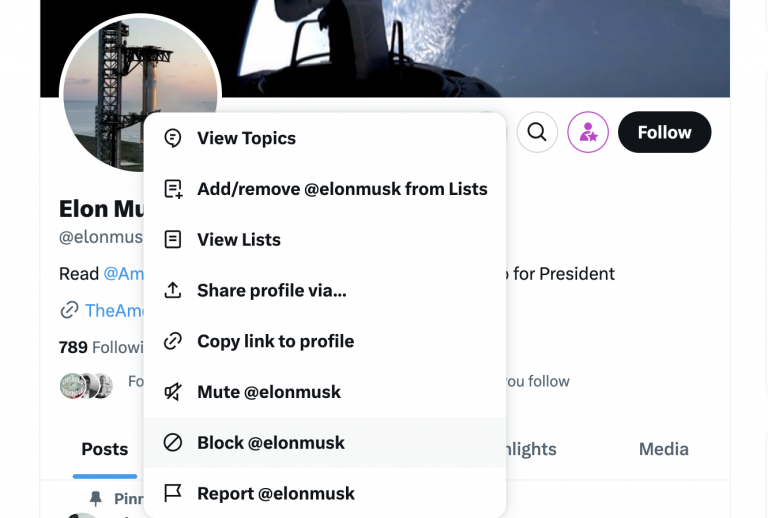

Elon Musk’s X is moving forward with his proposed changes to blocking users, a shift that allows blocked accounts to see a user’s public profile and posts but prevents them from interacting with the content.

Musk, who has long made it known he doesn’t agree with the current blocking and moderation environment online, sees the account blocking standard as ineffectual, with bad actors using alternative accounts or private browsers to get around the block.

The notion is a fragment of the truth. Blocking has never been an effective solution for online harassment. Nor does it guarantee a user’s online safety. But it’s a first defense against bad actors and abusers in online spaces. And, when used in conjunction with more expansive measures and online safety education, it fills out a user’s safe online experience toolbox.

A standard blocking feature is also a required safety component for downloadable social media apps in online marketplaces. So what will happen when it’s gone?

Stalking, harassment, and the rise of deepfakes make blocking necessary

Almost a month after Musk initially posted about the change, X users logged on to find a formal pop-up from the platform alerting users to the change. Many were enraged, calling it a misunderstanding of how blocking is fundamentally used and a move that will only support further stalking and harassment.

Users pointed out that the block button is often the first recourse in situations of mass doxxing or online verbal abuse, like death threats, spurred by a viral post or controversy. Most noted that the new blocking tool makes being online even more difficult for those choosing to keep public accounts, often for professional reasons, essentially forcing users to switch to private profiles if they don’t want previously blocked accounts to view their musings and photos. For many, blocking is essential to setting online boundaries.

It also means fewer protections for communities and identities already at risk of harassment. GLAAD’s 2024 Social Media Safety Index, an annual review of online platforms’ protection policies, found that X was the most unsafe platform for LGBTQ users. The same was true in 2023. Advocacy organizations like the Trevor Project frequently recommend at-risk users exercise the ability to block users liberally.

X’s altered blocking features could have even greater repercussions for young users, who already face higher risks of abuse on these platforms amid a national mental health crisis.

A report by Thorn, a tech nonprofit combating child sexual abuse, found that teens rely heavily on online safety tools to defend themselves in digital spaces, and are “most likely to use blocking and leverage it as a tool for cutting off contact in an attempt to stop future harassment,” according to the organization.

“Online safety tools serve as a primary defense for minors facing harmful online sexual interactions,” the report reads. “Many youth prefer using tools like blocking, reporting, and muting over seeking help from their offline community. In fact, teenagers are about twice as likely to use these online tools than to talk to someone in their real-life network.”

This reliance on digital features by young users conjures up other issues — an overreliance on piecemeal platform policies, as well as a sense of shame and fear in reporting these interactions to people in real life, to name a few — but that won’t be solved by removing the features altogether.

X is already failing to appropriately address concerns about content moderation on its platform, and has been relying on an opaque policy of “rehabilitating” its offending users. As the amount of AI-generated child sexual abuse material, lamely checked by Big Tech, increases, open access to youth accounts and the media they post is even more concerning.

At large, social media platforms have been under fire for their failures to address online safety and mental well-being. While Musk has brought back some safety and moderation offerings (after gutting them), other social media platforms have leaned more heavily into customizable online safety tools, including blocking features. Instagram’s recent “Limits” and “Teen Accounts,” for example, have built up even more barriers between young users and strangers online, and its parent company Meta has introduced similar restrictions across its platforms.
